Natural Intelligence

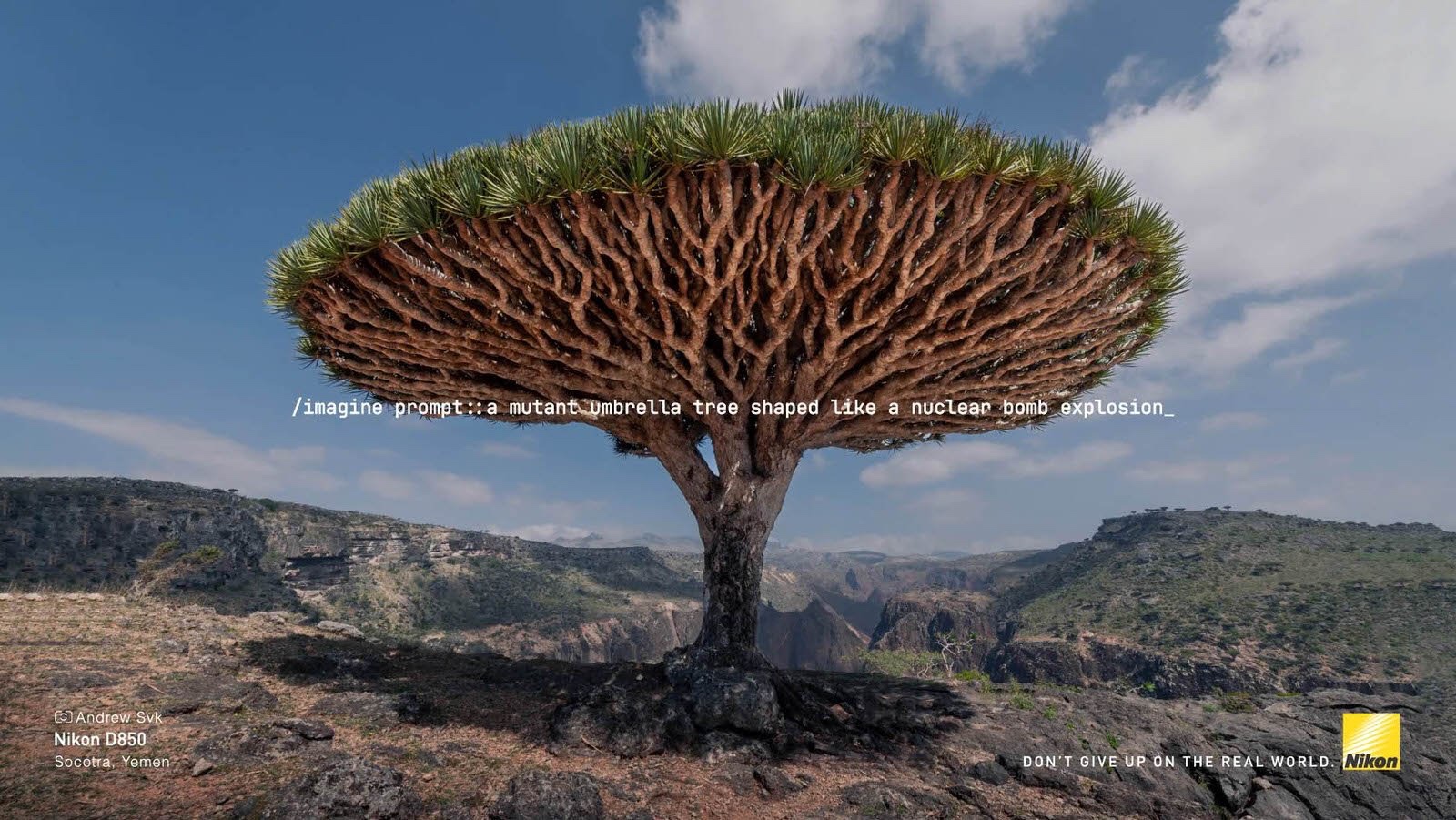

Nikon Peru has come up with a creative campaign to push back against AI-generated imagery, according to Michael Zhang at PetaPixel.

There are several initiatives in progress regarding imagery created with artificial intelligence algorithms. Adobe has its Content Authenticity Initiative, which is working on technical solutions for image verification, and a couple of different groups are working on developing ethical standards and descriptors for these works.

In the journalism realm, we have a trope about knowing your source, and I think that's a critical part of audience education. We can spend many hours discussing the ethical implications of AI-generated works amongst ourselves, and most journalists are going to come down hard on the "not in my publication, ever" side of the discussion. Your chances of seeing AI-generated works from the major news publications (The New York Times, The Washington Post, the Associated Press, Agence France-Presse, Reuters and many others) are just about zero.

But … that’s not where everyone gets their news. Whenever you’re presented with information, ask yourself these questions (as my late colleague Barry Hollander taught thousands of journalism students to do): What do they know? How do they know it? Should they know it? Why are they sharing it with you?

The Adobe project focuses on the idea of provenance: it aims to let a viewer look into the history of an image and see whether it has been altered since it was signed, for lack of a better phrase, by the original author or publisher. That's great, so long as we have an audience that both cares about image integrity and is willing to do the work to verify the authenticity of an image.

But it's the viewer I worry about most. In the constant barrage of visuals we are doused with every day, will we all be willing to do the work to determine what is real and what is generated? Will the publics, scattered around the world, be willing to have serious discussions about what is a photograph (a visual created by light striking a surface and being recorded) and what is generated by prompts fed into a machine learning algorithm, meaning that the "moment" presented never occurred, that there was no other witness because there was nothing to witness?

How many deceptions will people see without knowing they are being deceived? How many people will take advantage of our visual dousing to dose us with disinformation? That's a conversation journalists need to be having, not just with other journalists but with the public.